Analyzing and visualizing high-dimensional datasets is a recurring challenge in data work. One widely used tool for this task is the self-organizing map (SOM), an unsupervised learning algorithm introduced by Teuvo Kohonen in the early 1980s. SOMs map complex, multi-dimensional data onto a simpler, low-dimensional grid while approximately preserving the topological structure of the original data. This makes them a versatile tool in areas ranging from image processing to bioinformatics.

In this article, we will delve deeper into the workings of self-organizing maps, their characteristics, implementation, and applications across various fields. We will also explore their limitations and provide practical recommendations for optimizing their use.

Self-Organizing Maps: An Introduction

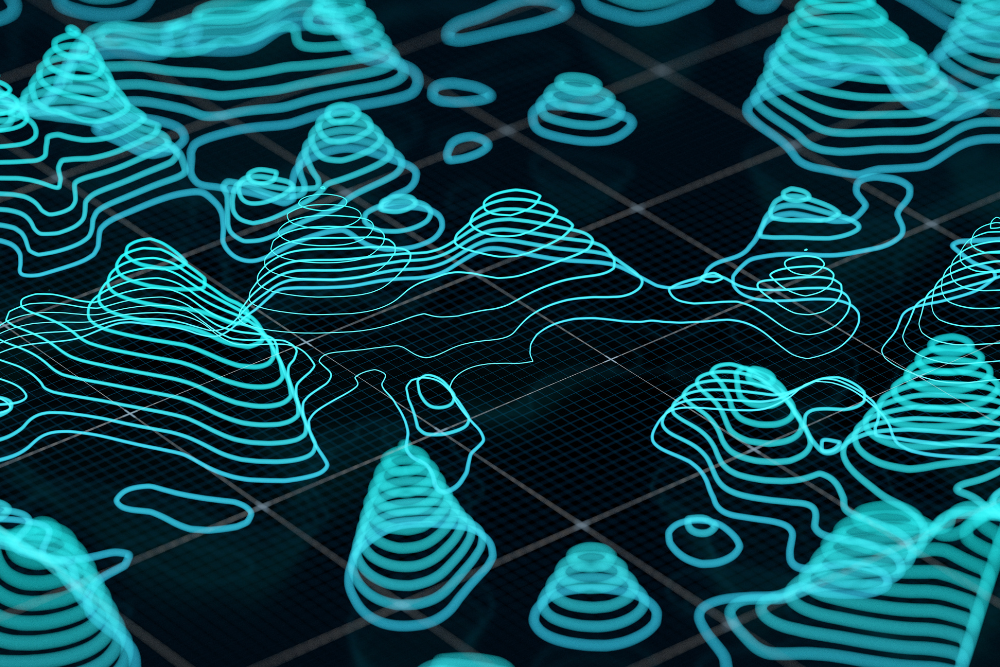

Self-organizing maps (SOMs), also known as Kohonen maps, are a type of artificial neural network that is trained using unsupervised learning to produce a low-dimensional (typically two-dimensional), discretized representation of the input space of the training samples, called a map. In simpler terms, SOMs are used to visualize high-dimensional data in a lower-dimensional space while preserving the topological relationships between the data points. This makes them a powerful tool for dimensionality reduction and data visualization.

How Does a SOM Work?

A SOM consists of a grid of nodes, where each node is associated with a weight vector of the same dimensionality as the input data. The weight vectors are typically initialized randomly at the beginning of the training process, although they can also be initialized from the data itself (for example, along its principal components). During training, the SOM learns to map the input data onto the grid in such a way that nearby nodes represent similar data points. This is achieved through a competitive learning process.

When a new data point is presented to the network, the SOM calculates the distance (typically Euclidean) between the data point and each weight vector. The node whose weight vector is closest to the data point is called the best matching unit (BMU). The BMU and its neighbors on the grid are then moved toward the input data. How strongly each neighbor is updated is determined by the neighborhood function and the learning rate, both of which are usually reduced as training progresses.
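The competition and update step described above can be sketched in a few lines of NumPy. This is a minimal illustration, not a reference implementation: the function name `som_step`, the Gaussian neighborhood, and the default values for `learning_rate` and `sigma` are assumptions chosen for the example.

```python
import numpy as np

def som_step(weights, x, learning_rate=0.5, sigma=1.0):
    """Apply one SOM update in place and return the BMU's grid coordinates.

    weights: array of shape (rows, cols, dim), one weight vector per node.
    x: input vector of shape (dim,).
    Assumes a Gaussian neighborhood function (one common choice).
    """
    rows, cols, _ = weights.shape

    # Distance from x to every node's weight vector (Euclidean).
    dists = np.linalg.norm(weights - x, axis=2)

    # The best matching unit is the node with the smallest distance.
    bmu = np.unravel_index(np.argmin(dists), dists.shape)

    # Squared distance on the grid from every node to the BMU.
    ii, jj = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")
    grid_d2 = (ii - bmu[0]) ** 2 + (jj - bmu[1]) ** 2

    # Neighborhood strength: 1 at the BMU, decaying with grid distance.
    h = np.exp(-grid_d2 / (2.0 * sigma ** 2))

    # Move each weight vector toward x, scaled by learning rate and neighborhood.
    weights += learning_rate * h[:, :, None] * (x - weights)
    return bmu

# Example: a 5x5 map over 3-dimensional inputs.
rng = np.random.default_rng(0)
weights = rng.random((5, 5, 3))
x = np.array([0.2, 0.7, 0.1])
bmu = som_step(weights, x)
```

With `learning_rate=0.5`, the BMU's weight vector moves exactly halfway toward `x`, while nodes farther away on the grid move by progressively smaller fractions.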

Characteristics of Self-Organizing Maps

- Topological ordering: SOMs tend to preserve the topological relationships between the data points in the input space. This means that data points that are close together in the input space will usually be mapped to the same node or to nearby nodes in the SOM.

- Non-linear projection: SOMs can map high-dimensional data onto a low-dimensional space in a non-linear fashion.

- Unsupervised learning: SOMs do not require labeled data to train, making them a useful tool for exploratory data analysis.

- Visualization: SOMs can be used to create visual representations of high-dimensional data, making it easier to understand complex relationships between variables.
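One common way to turn a trained map into a picture is the U-matrix (unified distance matrix), which is not covered above but follows directly from the weight vectors: for each node, it records the average distance to the weight vectors of its grid neighbors. The sketch below assumes a rectangular grid with 4-connectivity; the function name `u_matrix` is hypothetical.

```python
import numpy as np

def u_matrix(weights):
    """Mean distance from each node's weight vector to its 4-connected grid neighbors.

    weights: array of shape (rows, cols, dim).
    Returns an array of shape (rows, cols); high values suggest cluster boundaries.
    """
    rows, cols, _ = weights.shape
    total = np.zeros((rows, cols))
    count = np.zeros((rows, cols))

    # Distances between vertically adjacent nodes, credited to both nodes.
    dv = np.linalg.norm(weights[1:] - weights[:-1], axis=2)
    total[1:] += dv
    count[1:] += 1
    total[:-1] += dv
    count[:-1] += 1

    # Distances between horizontally adjacent nodes, credited to both nodes.
    dh = np.linalg.norm(weights[:, 1:] - weights[:, :-1], axis=2)
    total[:, 1:] += dh
    count[:, 1:] += 1
    total[:, :-1] += dh
    count[:, :-1] += 1

    # Guard against a 1x1 map, which has no neighbors.
    return total / np.maximum(count, 1)

# Example: the left half of the map holds one prototype, the right half another.
weights = np.zeros((3, 4, 2))
weights[:, 2:] = 1.0
u = u_matrix(weights)  # the two middle columns form a ridge between the halves
```

Plotting `u` as a heatmap (for example with matplotlib's `imshow`) typically shows dark regions for groups of similar nodes separated by bright ridges.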

5 Stages in Self-Organizing Maps

- Initialization: The weight vectors of the SOM are initialized randomly.

- Competition: For each input data point, the BMU is found.

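- Cooperation: The BMU defines a neighborhood on the grid, and the neighborhood function determines how strongly each nearby node will take part in the update.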

- Adaptation: The weight vectors of the BMU and its neighbors are adjusted to be more similar to the input data.

- Termination: The training process is terminated when a stopping criterion is met, such as a maximum number of iterations or a minimum change in the weight vectors.
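The five stages above can be put together into a small training loop. The following is a sketch under stated assumptions rather than a definitive implementation: the function name `train_som`, the exponential decay schedules, the Gaussian neighborhood, and all default parameter values are illustrative choices, and the input data is assumed to be scaled to the range [0, 1] so that random initial weights are in a sensible range.

```python
import numpy as np

def train_som(data, rows=6, cols=6, max_iter=1000,
              lr0=0.5, sigma0=2.0, tol=1e-5, seed=0):
    """Train a SOM on `data` (shape: n_samples x dim) and return the weights."""
    rng = np.random.default_rng(seed)
    dim = data.shape[1]

    # 1. Initialization: random weight vectors in [0, 1).
    weights = rng.random((rows, cols, dim))
    ii, jj = np.meshgrid(np.arange(rows), np.arange(cols), indexing="ij")

    for t in range(max_iter):
        # Learning rate and neighborhood radius shrink over time
        # (exponential decay is one common schedule, assumed here).
        frac = t / max_iter
        lr = lr0 * np.exp(-3.0 * frac)
        sigma = sigma0 * np.exp(-3.0 * frac)

        # Pick one training sample at random.
        x = data[rng.integers(len(data))]

        # 2. Competition: find the best matching unit.
        dists = np.linalg.norm(weights - x, axis=2)
        bi, bj = np.unravel_index(np.argmin(dists), dists.shape)

        # 3. Cooperation: Gaussian neighborhood centered on the BMU.
        h = np.exp(-((ii - bi) ** 2 + (jj - bj) ** 2) / (2.0 * sigma ** 2))

        # 4. Adaptation: pull the BMU and its neighbors toward the sample.
        delta = lr * h[:, :, None] * (x - weights)
        weights += delta

        # 5. Termination: stop early if the weights barely changed,
        #    otherwise stop when max_iter is reached.
        if np.abs(delta).max() < tol:
            break

    return weights

# Example usage on synthetic data.
rng = np.random.default_rng(42)
data = rng.random((200, 3))
weights = train_som(data)
print(weights.shape)  # (6, 6, 3)
```

Checking the change from a single sample is a noisy stopping criterion; in practice a fixed iteration budget or a change measured over a full pass through the data is often used instead.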

How Do You Implement Self-Organizing Maps?

There are many software packages that can be used to implement SOMs, including:

- Python: The minisom package is a popular choice for implementing SOMs in Python.

- R: There are several R packages that can be used to implement SOMs, such as the kohonen package.

Applications of Self-Organizing Maps in Different Fields

Self-organizing maps have a wide range of applications across various fields. Here are a few examples:

- Image processing: SOMs can be used for image segmentation, feature extraction, and compression.

- Bioinformatics: SOMs can be used to analyze gene expression data and identify patterns in biological data.

- Finance: SOMs can be used for clustering financial data, detecting anomalies, and predicting market trends.

- Social sciences: SOMs can be used to analyze social networks, identify communities, and understand human behavior.

- Engineering: SOMs can be used for process control, fault detection, and pattern recognition.

Variants of Self-Organizing Maps

There are several variants of self-organizing maps, each with its own unique characteristics and applications. Some of the most common variants include:

- Growing Self-Organizing Maps (GSOMs): GSOMs can dynamically adjust their size during training, allowing them to adapt to the complexity of the data.

- Hierarchical Self-Organizing Maps (HSOMs): HSOMs consist of multiple layers of SOMs, allowing for hierarchical representation of data.

- Fuzzy Self-Organizing Maps (FSOMs): FSOMs use fuzzy logic to represent the degree to which a data point belongs to each node in the map.

Challenges and Limitations of Self-Organizing Maps

While self-organizing maps are a powerful tool, they do have some limitations:

- Sensitivity to initialization: Different initial weight vectors can lead to different final maps, so results may vary from run to run unless the initialization is fixed.

- Local minima: The training process can settle into a poorly ordered configuration, such as a twisted or folded map, leading to suboptimal results.

- Interpretation: Interpreting the results of a SOM can be challenging, especially for high-dimensional data.

- Computational cost: Training large SOMs can be computationally expensive.

Despite these limitations, self-organizing maps remain a valuable tool for data analysis and visualization. By understanding the strengths and weaknesses of SOMs, researchers and practitioners can effectively apply them to a wide range of applications.